MIWAI 2024 - HYBRID CONFERENCE / Virtual attendance & Face to face

The 17th International Conference on Multi-disciplinary Trends in Artificial Intelligence


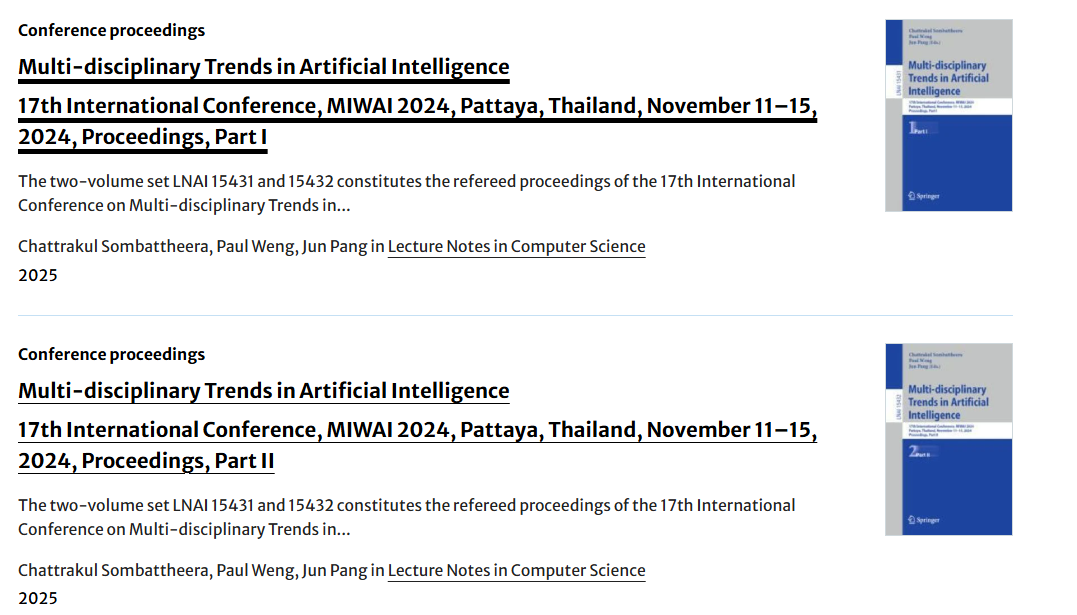

We are pleased to announce that the official proceedings of the 17th International Conference on Multi-disciplinary Trends in Artificial Intelligence (MIWAI 2024) are now available. Conference participants will receive complimentary access to these proceedings for a limited period of 4 to 6 weeks and can access them through the following links:

Part I: https://link.springer.com/book/10.1007/978-981-96-0692-4

Part II: https://link.springer.com/book/10.1007/978-981-96-0695-5

To ensure a smooth experience during MIWAI 2024, we’ll be hosting a test session for all attendees to check their audio, video, and connectivity settings. This is a chance to make sure everyone is ready and to troubleshoot any issues in advance. If the Zoom application hasn't been installed on your PC or Mac, please go to https://zoom.us/download and follow the prompts to complete the installation. You may need to grant permission on your PC/Mac to allow the installation.

| 🛠 Test Session Details | |
|---|---|
| Date: | Tuesday, November 12, 2024 |
| Time: | 10:00-16:00 UTC+7 (Bangkok, Thailand time) |
| Zoom Link: | Join Test Session (Meeting ID: 793 9829 2507, Passcode: 6DL3pP) |

Please join the session to ensure everything runs smoothly for the main event. We appreciate your cooperation and look forward to an amazing MIWAI 2024!

All dates and times are in Bangkok, Thailand time (UTC+7).

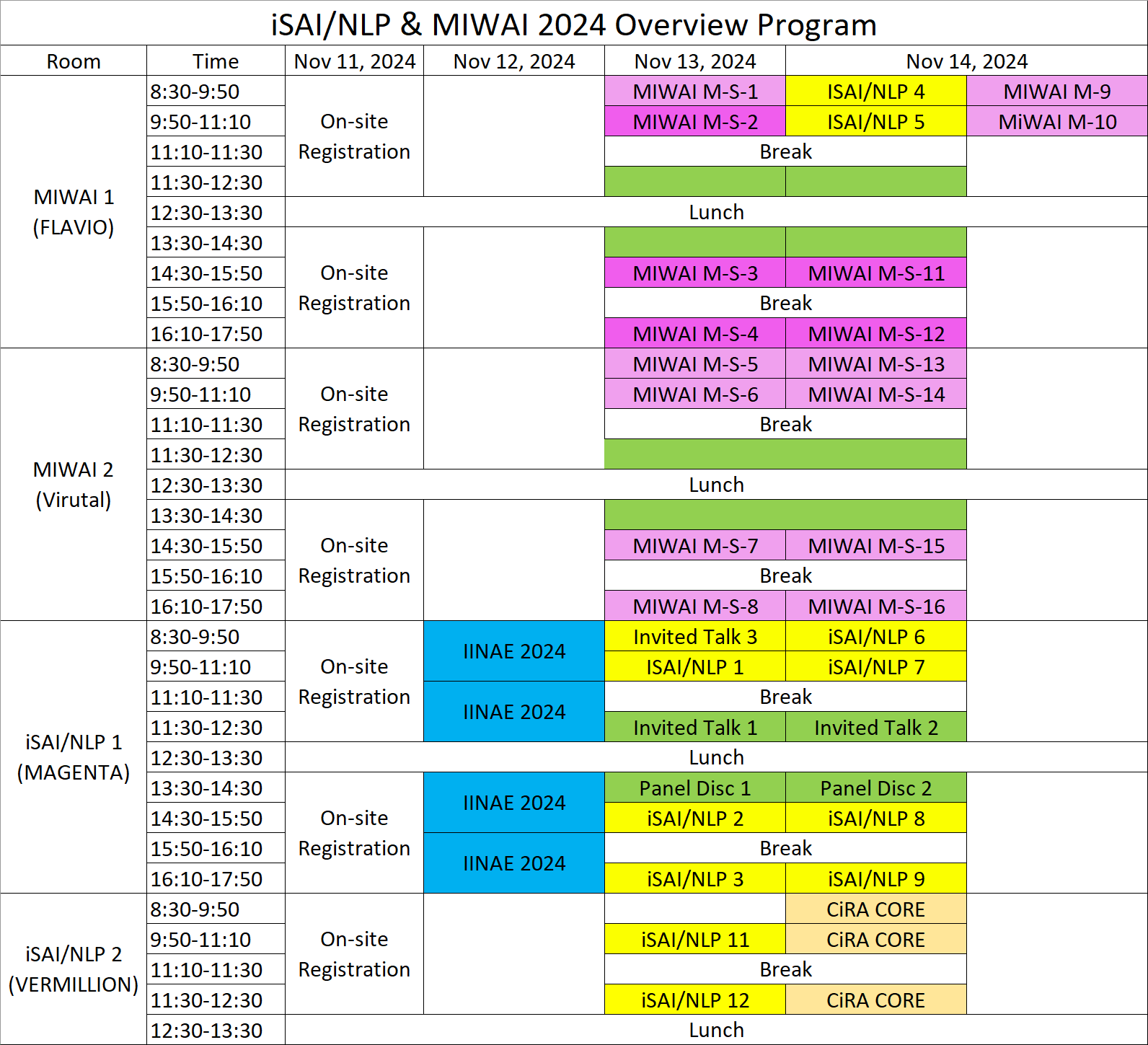

We’re excited to announce the **MIWAI 2024 Conference**! This year’s event will feature insightful discussions, presentations, and networking opportunities in the field of artificial intelligence and cognitive science. Join us virtually to connect with experts and enthusiasts from around the world.

| 🛠 Conference Details | |
|---|---|
| Date: | Wednesday, November 13 - Thursday, November 14, 2024 |
| Time: | 08:30-18:00 UTC+7 (Bangkok, Thailand time) |
| Zoom Link: | Join MIWAI 2024 Conference (Meeting ID: 878 3313 5517, Passcode: 296341) |

We look forward to seeing you there and sharing an inspiring MIWAI 2024 experience together!

| Invited Talk 1 | Speaker | Asst. Prof. Supachai Vongbunyong, Ph.D. |
|---|---|---|
| Title | Artificial Intelligence in Sustainable Manufacturing and Industry 5.0 | |
| Abstract | Today the world faces key challenges such as aging populations, resource efficiency, and mass customization. Addressing these challenges, this presentation explores AI's potential to transform manufacturing through increased sustainability, efficiency, and adaptability, all within the framework of a human-centered Industry 5.0 paradigm. AI technologies are implemented in various manufacturing domains. Generative design enables AI-driven product innovation, while advanced machine vision improves inspection and material handling processes. AI applications in intralogistics and energy management contribute to streamlined operations and cost reductions, enhancing overall efficiency. In addition, human-robot collaboration is highlighted as a means to improve worker satisfaction by assigning repetitive or hazardous tasks to machines. Generative AI is recognized as a high-value tool in manufacturing, capable of automating complex processes like test case generation, document creation, and production monitoring. Benefits of AI in this sector include continuous production, enhanced quality control, real-time decision-making, and reduced operational costs, all of which align with sustainable manufacturing goals. | |
| Invited Talk 2 | Speaker | Prof. Patrick Doherty, Ph.D. |
| Title | Collaborative Robotics for Emergency Rescue: A Distributed Task, Information, and Interaction Perspective | |
| Abstract | In the context of collaborative robotics, both distributed planning and task allocation, and acquisition of situation awareness are essential for supporting goal achievement, collective intelligence, and decision support in teams of robots and human agents. This is particularly important in applications pertaining to emergency rescue and crisis management. Given a high-level mission specification provided by a member of a rescue team, human or robotic, one then requires a mechanism for generating and executing complex, multi-agent distributed plans and tasks. The proper task representation is essential for both the generation and execution of complex multi-agent distributed tasks. Task Specification Trees have been proposed for this purpose and a Delegation Framework is used for distributed task allocation. Additionally, during operational missions, data and knowledge are gathered incrementally and in different ways by teams of heterogeneous robots and humans. We describe this as the formation and management of Hastily Formed Knowledge Networks (HFKN). The resulting distributed knowledge structures can then be queried by individual agents for decision support. These structures are represented as RDF graphs, and graph synchronization techniques are introduced to retain the consistency of the collective knowledge of a team. Flexible human interaction with teams of robots is also an essential component in emergency rescue. Integrating LLMs into the interaction process provides a new way to think about interaction. | |
| Panel Discussion 1 | Topic | Toward Industry 4.0 with AI |
| Panel Discussion 2 | Topic | Toward Advanced Defense with AI |

Artificial Intelligence (AI) research has broad applications in real-world problems. Examples include control, planning and scheduling, pattern recognition, knowledge mining, software applications, strategy games, and others. The ever-evolving needs of society and business, on both a local and a global scale, demand better technologies for solving increasingly complex problems. Such needs can be found in all industrial sectors and in any part of the world.

The International Conference on Multi-disciplinary Trends in Artificial Intelligence (MIWAI), formerly called The Multi-disciplinary International Workshop on Artificial Intelligence, is a well-established scientific venue in the field of artificial intelligence. MIWAI was established more than 16 years ago. This conference aims to be a meeting place where excellence in AI research meets the need to solve dynamic and complex problems in the real world. Academic researchers, developers, and industrial practitioners will have extensive opportunities to present their original work, technological advances, and practical problems. Participants can learn from each other and exchange their experiences in order to fine-tune their activities and help each other better. The main purposes of the MIWAI series of conferences are:

Artificial intelligence is a broad area of research. We encourage researchers to submit papers in areas including, but not limited to, the following:

Submission link: https://www.easychair.org/conferences/?conf=miwai2024

Both research and application papers are solicited. All submitted papers will be carefully peer-reviewed on the basis of technical quality, relevance, significance, and clarity.

Each paper should have no more than twelve (12) pages in the Springer-Verlag LNCS style. The authors' names and institutions should not appear in the paper. Unpublished work of the authors should not be cited. Springer-Verlag author instructions are available at: https://www.springer.com/gp/computer-science/lncs/conference-proceedings-guidelines

The authors of each accepted paper must upload the camera-ready version of the paper to MIWAI 2024’s submission website via EasyChair by September 25, 2024 at 23:59 UTC-12. The camera-ready version includes:

The authors of each accepted paper must send us a signed copyright form. One author may sign on behalf of all of the authors of a particular paper. The copyright form must be present and correct. In the first three fields of the form, insert the following information:

The copyright form can be accessed here.

For each accepted paper, at least one of the authors must pay the registration fee by September 25, 2024 at 23:59 UTC-12 for the paper to be included in the LNAI proceedings. The fee details are given in the table below.

Important Note: At least one author of each accepted paper must register for the conference in order for their paper to be included in the LNAI proceedings. Additional (co)authors of that paper may also register if they wish.

The early registration fee (Early-bird or before September 30, 2024) and the late registration fee (between October 1, 2024 and November 11, 2024) are given in the table below.

To qualify for the "Participant" registration fee, the person planning to attend the conference must be a full-time student or a participant in an accredited institute. You need to bring the student status form (original copy) and present it when collecting the conference material at the registration desk in Pattaya, Thailand.

The registration fee covers attendance at the conference and any other services that we are planning to provide during the conference. There will be morning and afternoon breaks and lunches on November 13-15. The reception dinner on the evening of November 14th (TBC) will also be covered. Registered authors will have access to the online version of the LNAI proceedings before and during the conference.

Note: The conference organizers WILL NOT be responsible for missing payments and/or any other problems related to the payments. The registration fee is non-refundable.

All payment deadlines are in the UTC-12 timezone.
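For authors in other time zones, the UTC-12 deadlines can be converted to local time with a few lines of Python. The following is only a minimal sketch: it assumes fixed offsets (UTC-12 for deadlines, UTC+7 for Bangkok, as stated on this page), and the helper name `deadline_in` is illustrative rather than part of any conference tooling.

```python
from datetime import datetime, timedelta, timezone

# Fixed offsets taken from this page: deadlines are stated in UTC-12,
# conference times in UTC+7 (Bangkok). These are assumptions of the sketch.
AOE = timezone(timedelta(hours=-12), name="UTC-12")
BANGKOK = timezone(timedelta(hours=7), name="UTC+7")


def deadline_in(zone: timezone, deadline: datetime) -> datetime:
    """Return the same instant expressed in another fixed-offset zone."""
    return deadline.astimezone(zone)


# Camera-ready and payment deadline quoted above: September 25, 2024, 23:59 UTC-12.
deadline = datetime(2024, 9, 25, 23, 59, tzinfo=AOE)

print(deadline_in(BANGKOK, deadline).isoformat())       # 2024-09-26T18:59:00+07:00
print(deadline_in(timezone.utc, deadline).isoformat())  # 2024-09-26T11:59:00+00:00
```

In other words, a deadline of 23:59 UTC-12 on September 25 falls at 18:59 on September 26 in Bangkok time.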

| Type of Authors | Type of Registration | Early-bird (USD), before September 25, 2024 | On-Spot (USD), between October 1, 2024 and November 11, 2024 |
|---|---|---|---|
| Presentation | Online and Virtual | 250 | - |
| Presentation | On-site | 500 | 650 |
| Participant | On-site | 200 (for non-authors, before September 30, 2024) | 250 |

Supported payment methods: credit card and QR payment (Thailand only).

All deadlines are in the UTC-12 timezone.

| Event | Date |
|---|---|
| Submission Deadline | |
| Notification Deadline | |
| Camera Ready Deadline | |
| Registration Deadline | |
| Conference Dates | November 11-15, 2024 |

Linköping University, Sweden

King Mongkut's University of Technology Thonburi, Thailand

In the context of collaborative robotics, both distributed planning and task allocation, and acquisition of situation awareness are essential for supporting goal achievement, collective intelligence, and decision support in teams of robots and human agents. This is particularly important in applications pertaining to emergency rescue and crisis management. Given a high-level mission specification provided by a member of a rescue team, human or robotic, one then requires a mechanism for generating and executing complex, multi-agent distributed plans and tasks. The proper task representation is essential for both the generation and execution of complex multi-agent distributed tasks. Task Specification Trees have been proposed for this purpose and a Delegation Framework is used for distributed task allocation. Additionally, during operational missions, data and knowledge are gathered incrementally and in different ways by teams of heterogeneous robots and humans. We describe this as the formation and management of Hastily Formed Knowledge Networks (HFKN). The resulting distributed knowledge structures can then be queried by individual agents for decision support. These structures are represented as RDF graphs, and graph synchronization techniques are introduced to retain the consistency of the collective knowledge of a team. Flexible human interaction with teams of robots is also an essential component in emergency rescue. Integrating LLMs into the interaction process provides a new way to think about interaction.

In this talk, I will present both the HFKN and Delegation Frameworks, their integration, and in addition describe various field robotic experiments with UAVs which use the overall system. I will also show some initial work that uses LLMs in the interaction process. If time allows, I will also discuss a Swedish national project where this framework has been used by both industrial and academic partners in large public safety scenarios using UAVs, USVs, and AUVs in maritime and sea rescue scenarios.

Patrick Doherty is a Professor of Computer Science at the Department of Computer and Information Sciences (IDA), Linköping University, Sweden. He leads the Artificial Intelligence Lab at IDA. He is an ECCAI/EurAI fellow, an AAIA fellow, and a member of ACM and AAAI. He previously served as Editor-in-Chief of the Artificial Intelligence Journal. He has over 30 years of experience in areas such as knowledge representation and reasoning, automated planning, intelligent autonomous systems, and multi-agent systems. A major area of application is Unmanned Aircraft Systems (UAS). He has over 200 refereed scientific publications in his areas of expertise and has given numerous keynote and invited talks at leading international conferences.

Today the world faces key challenges such as aging populations, resource efficiency, and mass customization. Addressing these challenges, this presentation explores AI's potential to transform manufacturing through increased sustainability, efficiency, and adaptability, all within the framework of a human-centered Industry 5.0 paradigm. AI technologies are implemented in various manufacturing domains. Generative design enables AI-driven product innovation, while advanced machine vision improves inspection and material handling processes. AI applications in intralogistics and energy management contribute to streamlined operations and cost reductions, enhancing overall efficiency. In addition, human-robot collaboration is highlighted as a means to improve worker satisfaction by assigning repetitive or hazardous tasks to machines. Generative AI is recognized as a high-value tool in manufacturing, capable of automating complex processes like test case generation, document creation, and production monitoring. Benefits of AI in this sector include continuous production, enhanced quality control, real-time decision-making, and reduced operational costs, all of which align with sustainable manufacturing goals.

Asst. Prof. Supachai Vongbunyong, PhD, is a Director of the Institute of Field Robotics (FIBO), King Mongkut's University of Technology Thonburi, Bangkok, Thailand. He is a founder of the Hospital Automation Research Center (HAC@FIBO) and a co-founder of Famme Works Co., Ltd and the Innovation and Advanced Manufacturing Research Group. He received his PhD in Manufacturing Engineering and Management from The University of New South Wales (UNSW), Australia, and Master's and Bachelor's degrees in Mechanical Engineering from Chulalongkorn University, Thailand. His research expertise is robotics and automation in industrial, medical, and healthcare applications. His highlighted works include CARVER, autonomous mobile robots for hospital logistics. He has published more than 50 academic articles in international journals and conference proceedings. In addition, he was awarded the "TMA Young Technologist Award 2021" (Runner-up), the "KMUTT Rising Star Researcher Awards 2021", and the "Australia Alumni Innovation Award 2024".

Principal Applied Scientist, Microsoft

University of Mysore, India

Murdoch University, Australia

I am a Principal Applied Scientist at Microsoft India R&D Private Limited in Hyderabad, India. I am also an Adjunct Faculty at the International Institute of Information Technology, Hyderabad, and a visiting faculty at ISB, Hyderabad. I received my Master's in Computer Science from IIT Bombay in 2007 and my Ph.D. from the University of Illinois at Urbana-Champaign in 2013. Before this, I worked for Yahoo! Bangalore for two years. My research interests are in the areas of deep learning, natural language processing, web mining, and data mining. I have published more than 100 research papers in reputed refereed journals and conferences. I have also co-authored two books: one on Outlier Detection for Temporal Data and another on Information Retrieval with Verbose Queries.

D.S. Guru received the BSc, MSc, and PhD degrees in computer science and technology from the University of Mysore, India, in 1991, 1993, and 2000, respectively. He is currently a reader in the Department of Studies in Computer Science, University of Mysore, India. He was a fellow of BOYSCAT. He was a visiting research scientist at Michigan State University. He is supervising a couple of major projects sponsored by the UGC, the DST, and the Government of India. He has authored 25 journal papers and 124 peer-reviewed conference papers at international and national levels. His areas of research interest cover image retrieval, object recognition, shape analysis, sign language recognition, biometrics, and symbolic data analysis. He is a life member of Indian professional bodies such as the CSI, the ISTE, and the IUPRAI. He is a founder trustee of the Maharaja Education Trust, Mysore, which is establishing academic institutions in and around Mysore.


Emeritus Professor Chun Che (Lance) Fung, IEEE R10 Director (2023-2024), was trained and worked as a Marine Radio and Electronic Officer in Hong Kong during the 70’s. He graduated with a Bachelor of Science Degree with First Class Honours and a Master of Engineering Degree from the University of Wales in the early 80’s. His Ph.D. Degree was awarded in 1993 by the University of Western Australia, with a thesis on Artificial Intelligence and Power System Engineering, under the supervision of the late Professor Kit Po Wong. Apart from serving in the merchant navy, Lance has taught at Singapore Polytechnic (1982-1988), Curtin University (1989-2003), and Murdoch University from 2003, where he was appointed Emeritus Professor in 2015. His roles included Associate Dean of Research, Postgraduate Research Director, and Director of the Centre for Enterprise Collaborative in Innovative Systems. He has supervised to completion over 36 doctoral students and published over 398 academic articles in the Murdoch University Research repository. He has over 6100 citations and an h-index of 32 recorded in Google Scholar. His research interest is in the applications of computational intelligence to practical problems. Lance has been a dedicated IEEE member and volunteer for over 30 years in various positions in Chapters, WA Section, Australia Council, Region 10 Executive Committee, the IEEE SMC Society Board of Governors, and IEEE Board of Directors, plus many other IEEE committees. He can be reached at lanceccfung@ieee.org
